EKS Upgrade

- Part 1: EKS, AddOn

- Part 2: WorkNode

- Part 3: kubectl

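ㅁ Overview

ㅇ This is part 2 of the Amazon EKS version upgrade series and covers the WorkNode upgrade.

ㅇ Once the EKS cluster itself has been upgraded, its WorkNodes need to be upgraded to the matching EKS version as well.

ㅇ Previous post: Amazon EKS Version Upgrade, #1 EKS Cluster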

ㅁ AWS User Guide for Upgrading EKS WorkNodes

Updating a managed node group - Amazon EKS (docs.aws.amazon.com)

ㅇ This is the AWS user guide to follow when upgrading EKS WorkNodes.

ㅁ Checking the EKS WorkNode Version

# List the node groups with eksctl

$ eksctl get ng --cluster k8s-peterica

CLUSTER NODEGROUP STATUS CREATED MIN SIZE MAX SIZE DESIRED CAPACITY INSTANCE TYPE IMAGE ID ASG NAME TYPE

k8s-peterica work-nodes ACTIVE 2022-09-19T12:53:07Z 1 2 1 t3.medium AL2_x86_64 eks-work-nodes-3ac1aae7-96b0-a054-d0e2-e4cfab4b1094 managed

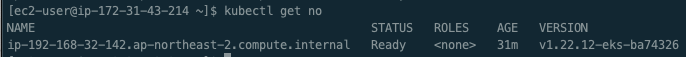

# Check the node version with kubectl

$ kubectl get no -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

ip-192-168-27-28.ap-northeast-2.compute.internal Ready <none> 7m44s v1.21.14-eks-ba74326 192.168.27.28 3.35.24.209 Amazon Linux 2 5.4.209-116.367.amzn2.x86_64 docker://20.10.17

ㅇ The VERSION column shows that the WorkNode is running EKS 1.21.

ㅇ To upgrade the WorkNodes, the AMI ID of the WorkNode group has to be changed.

ㅇ So the AMI ID that matches the target EKS version has to be looked up first.
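When there are several nodes, comparing versions by eye gets tedious. The following is a minimal sketch, not part of the original procedure, that reduces a kubelet version string such as the one in the VERSION column to its major.minor part. The helper name kubelet_minor is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: reduce a kubelet version string such as "v1.21.14-eks-ba74326"
# to "major.minor". kubelet_minor is a hypothetical helper name.
kubelet_minor() {
  local v="${1#v}"          # drop the leading "v"
  local major="${v%%.*}"    # text before the first dot
  local rest="${v#*.}"      # text after the first dot
  printf '%s.%s\n' "$major" "${rest%%.*}"
}

# Usage against a live cluster (assumes kubectl is already configured):
#   kubectl get nodes \
#     -o jsonpath='{range .items[*]}{.status.nodeInfo.kubeletVersion}{"\n"}{end}' \
#     | while read -r v; do kubelet_minor "$v"; done | sort -u
```

If the usage pipeline prints more than one line, the node group is in a mixed-version state, which is expected while a rolling update is in progress.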

ㅁ Amazon EKS 최적화 Amazon Linux AMI 버전

ㅇ 이 곳에서 다음과 같은 표의 링크를 통해 Kubernetes 버전에 맞는 AMI ID를 찾아 볼 수 있다.

ㅇ 서울의 AMI ID 보기를 클릭하면 아래의 그림으로 이동한다.

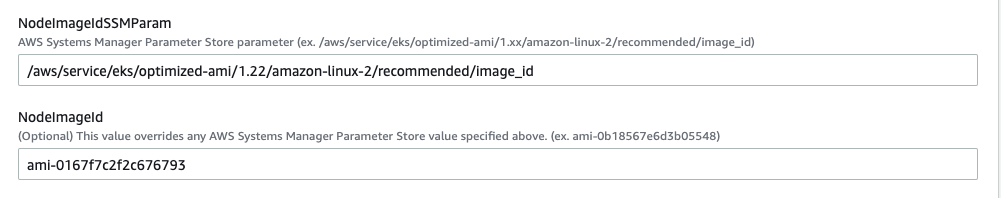

ㅇ EKS 1.22에 최적화된 ami-0167f7c2f2c676793 값을 찾았다.

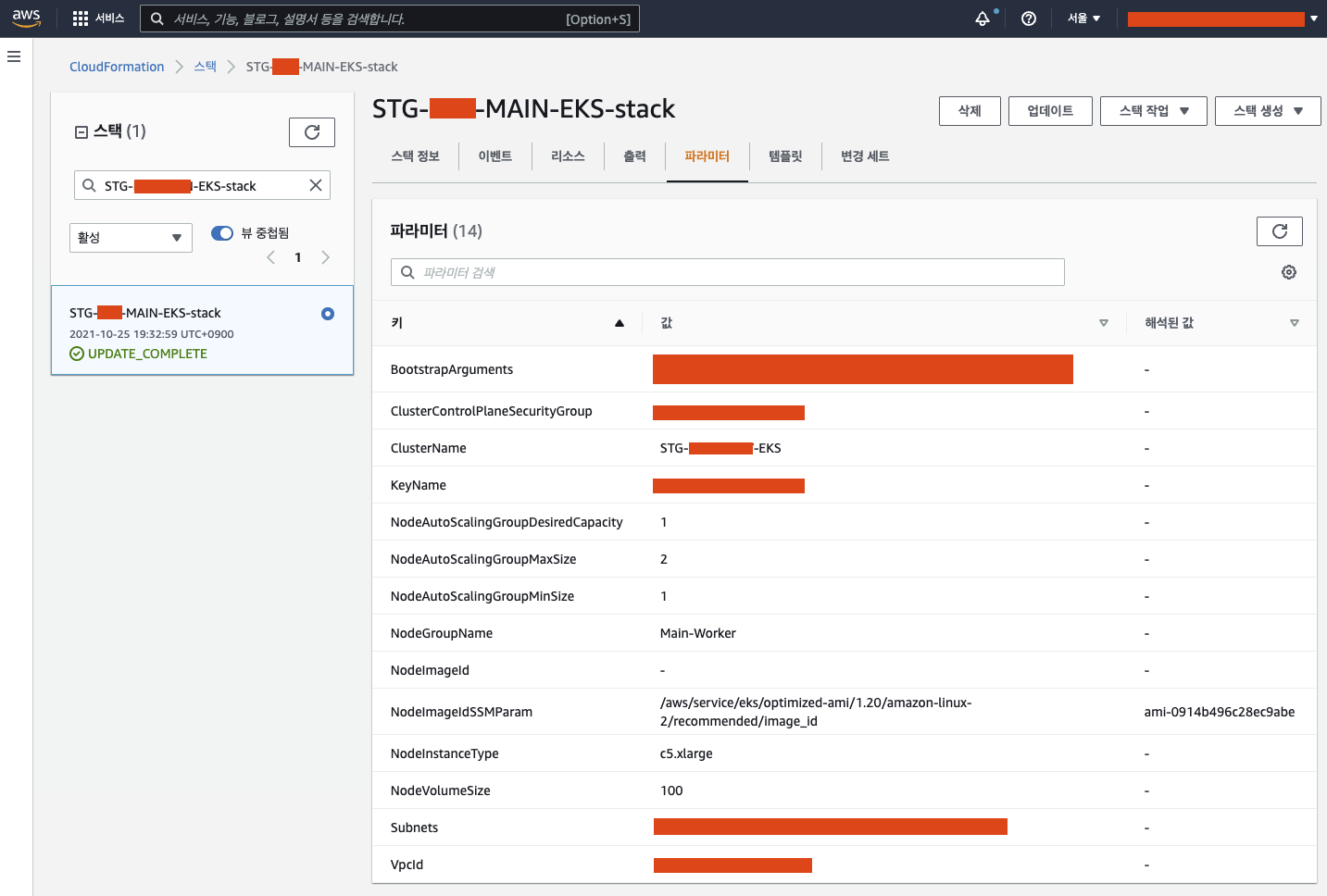
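As an alternative to clicking through the documentation table, AWS also publishes the recommended EKS optimized AMI ID as a public SSM parameter. The sketch below only builds the parameter name for Amazon Linux 2; the helper name eks_ami_param is hypothetical, and the lookup itself assumes an AWS CLI with working credentials.

```shell
#!/usr/bin/env bash
# Sketch: build the public SSM parameter name that holds the recommended
# Amazon Linux 2 EKS optimized AMI ID for a given Kubernetes version.
# eks_ami_param is a hypothetical helper name.
eks_ami_param() {
  printf '/aws/service/eks/optimized-ami/%s/amazon-linux-2/recommended/image_id\n' "$1"
}

# Usage (assumes the AWS CLI is installed and authenticated):
#   aws ssm get-parameter \
#     --name "$(eks_ami_param 1.22)" \
#     --region ap-northeast-2 \
#     --query 'Parameter.Value' --output text
```

The value behind this parameter moves forward as AWS releases new AMIs, so it will not necessarily return the AMI ID found above.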

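ㅁ Updating the CloudFormation Stack

ㅇ These are the current stack parameters of the QA (staging) environment.

ㅇ The parameter that needs attention is NodeImageIdSSMParam.

ㅇ Updating the stack with the current template opens the "Specify stack details" page.

ㅇ On that page, NodeImageId must be filled in explicitly with the AMI ID.

ㅇ If only NodeImageIdSSMParam is set, the image it resolves to can change over time, so a node instance built from an AMI that has not gone through verification could end up running.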

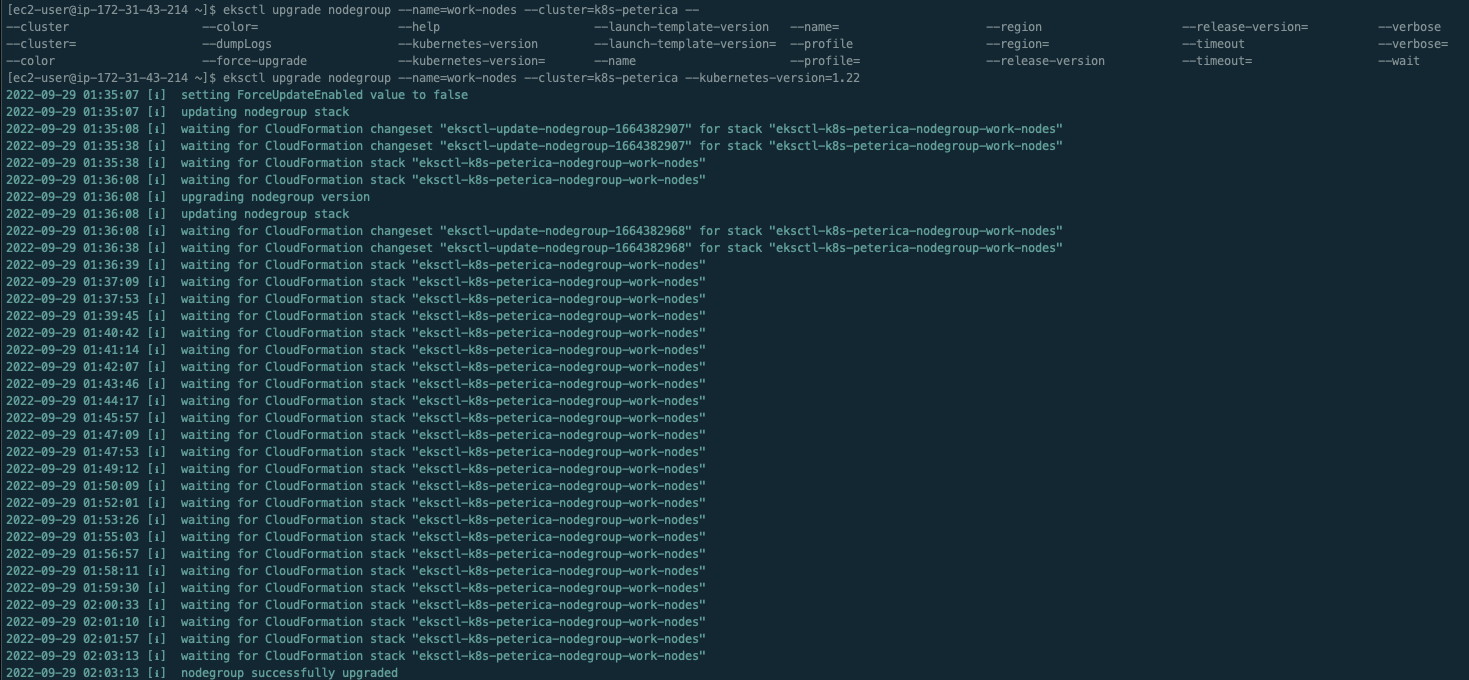
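The same update can be made from the command line instead of the console. The sketch below is an assumption about how that might look, not the procedure used in this post: it builds the --parameters arguments so that NodeImageId is pinned to a specific AMI and every other listed parameter keeps its previous value. The helper name pin_image_params, the stack name my-eks-nodes, and the extra parameter keys in the usage comment are placeholders; the real keys depend on the template in use.

```shell
#!/usr/bin/env bash
# Sketch: print CloudFormation --parameters arguments that pin NodeImageId
# to one AMI and leave the other listed keys at their previous values.
# pin_image_params is a hypothetical helper name.
#   pin_image_params AMI_ID [OTHER_PARAMETER_KEY...]
pin_image_params() {
  local ami="$1"; shift
  printf 'ParameterKey=NodeImageId,ParameterValue=%s' "$ami"
  local key
  for key in "$@"; do
    printf ' ParameterKey=%s,UsePreviousValue=true' "$key"
  done
  printf '\n'
}

# Usage (stack name and extra keys are placeholders; the command
# substitution is left unquoted on purpose so each parameter becomes
# its own argument):
#   aws cloudformation update-stack \
#     --stack-name my-eks-nodes \
#     --use-previous-template \
#     --capabilities CAPABILITY_IAM \
#     --parameters $(pin_image_params ami-0167f7c2f2c676793 ClusterName NodeGroupName)
```

When --use-previous-template is used, every parameter without a default still has to be listed, which is why the helper accepts the remaining keys.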

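ㅁ Upgrading the WorkNode EKS Version with eksctl

eksctl upgrade nodegroup --name=work-nodes --cluster=k8s-peterica --kubernetes-version=1.22

ㅇ The CloudFormation stack of the EKS cluster created for testing does not allow these parameters to be set.

ㅇ So the WorkNode EKS version was upgraded with eksctl instead, specifying the target version.

ㅇ The replacement launches new nodes and proceeds as a rolling update; it took about 30 minutes.

ㅇ To finish faster, it would help to set the node count of the Auto Scaling group to 0 beforehand.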

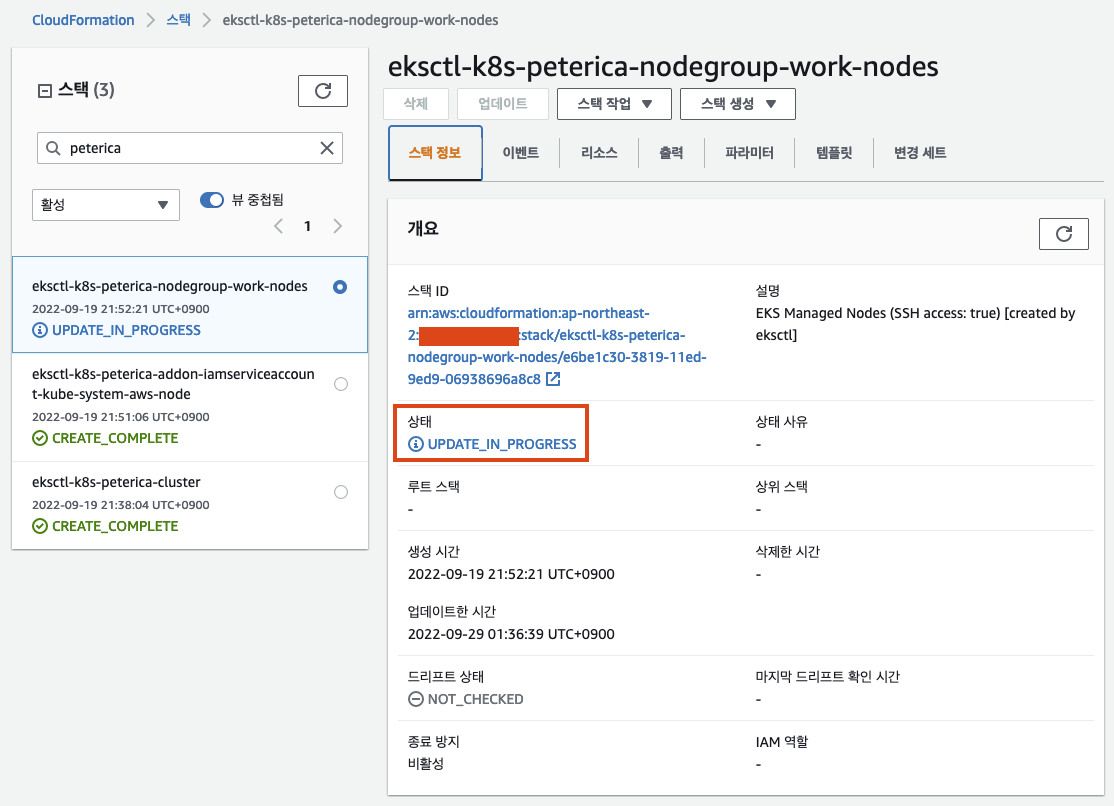

ㅇ As soon as the command ran, the status changed to UPDATE_IN_PROGRESS.
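Since the update takes a while, a small polling loop can wait for it to finish instead of refreshing the console. This is a sketch under assumptions: wait_until_active is a hypothetical helper that reruns any status command until it prints ACTIVE, the value shown in the STATUS column of the eksctl get ng output earlier. Using aws eks describe-nodegroup as that status command is my assumption, not something the post does.

```shell
#!/usr/bin/env bash
# Sketch: rerun a status command until it prints "ACTIVE".
# wait_until_active is a hypothetical helper name.
#   wait_until_active INTERVAL_SECONDS MAX_TRIES CMD [ARGS...]
# Returns 0 once CMD prints ACTIVE, 1 if MAX_TRIES is exhausted.
wait_until_active() {
  local interval="$1" tries="$2"; shift 2
  local i status
  for ((i = 1; i <= tries; i++)); do
    status="$("$@")"                 # run the status command
    echo "attempt $i: $status"
    if [ "$status" = "ACTIVE" ]; then
      return 0
    fi
    sleep "$interval"
  done
  return 1
}

# Usage (assumes the AWS CLI is installed and authenticated):
#   wait_until_active 30 80 aws eks describe-nodegroup \
#     --cluster-name k8s-peterica --nodegroup-name work-nodes \
#     --query 'nodegroup.status' --output text
```

With a 30 second interval and 80 tries the loop gives up after roughly 40 minutes, which leaves some margin over the 30 minutes observed here.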

ㅁ Watching the Actual Node Replacement

[ec2-user@ip-172-31-43-214 ~]$ kubectl get no -w

NAME STATUS ROLES AGE VERSION

ip-192-168-27-28.ap-northeast-2.compute.internal Ready <none> 49m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready <none> 50m v1.21.14-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 1s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 2s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 4s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 16s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 31s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady <none> 3s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady <none> 15s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 20s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 30s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady <none> 1s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady <none> 2s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady <none> 14s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 20s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 22s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 30s v1.22.12-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready <none> 55m v1.21.14-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady <none> 4s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady <none> 10s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady <none> 14s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 20s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 5m32s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 24s v1.22.12-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 56m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 56m v1.21.14-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 30s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 2m31s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 60s v1.22.12-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 58m v1.21.14-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 5m31s v1.22.12-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 59m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 60m v1.21.14-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 6m46s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 6m47s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 6m47s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 10m v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 7m32s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 6m1s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 8m33s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 8m56s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 10m v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 7m55s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 7m56s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 7m56s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 11m v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 9m35s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 9m59s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 15m v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 14m v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 14m v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 15m v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 20m v1.22.12-eks-ba74326

ㅇ The node status was monitored with the kubectl get no -w command.

ip-192-168-27-28.ap-northeast-2.compute.internal Ready <none> 49m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready <none> 55m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 56m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 58m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 59m v1.21.14-eks-ba74326

ip-192-168-27-28.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 60m v1.21.14-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady <none> 15s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready <none> 20s v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 14m v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 15m v1.22.12-eks-ba74326

ip-192-168-85-168.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 15m v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady <none> 14s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 20s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready <none> 6m46s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 7m32s v1.22.12-eks-ba74326

ip-192-168-82-87.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 8m56s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady <none> 14s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready <none> 20s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal Ready,SchedulingDisabled <none> 7m56s v1.22.12-eks-ba74326

ip-192-168-5-27.ap-northeast-2.compute.internal NotReady,SchedulingDisabled <none> 9m59s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 0s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal NotReady <none> 16s v1.22.12-eks-ba74326

ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 21s v1.22.12-eks-ba74326

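ip-192-168-32-142.ap-northeast-2.compute.internal Ready <none> 20m v1.22.12-eks-ba74326

ㅇ The listing above is the same output regrouped by node IP.

ㅇ After a new node is created, the existing node is not replaced immediately; it is first excluded from scheduling (SchedulingDisabled) and kept around for about 10 minutes.

ㅇ At this point only one node, 192.168.32.142, is running.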

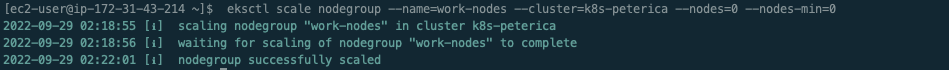
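The regrouping by node IP can also be done mechanically. The following is a minimal sketch, assuming the watch output was saved to a file: the hypothetical helper group_by_node reads kubectl get no -w lines on stdin and prints them grouped by node name, keeping the order in which nodes first appeared and the order of events within each node. Unlike the hand-sorted listing, it does not drop repeated lines.

```shell
#!/usr/bin/env bash
# Sketch: group `kubectl get no -w` output by node name.
# group_by_node is a hypothetical helper name.
group_by_node() {
  awk '
    $1 == "NAME" || NF == 0 { next }                 # skip header and blank lines
    !($1 in seen) { seen[$1] = 1; order[++n] = $1 }  # remember first-seen order
    { lines[$1] = lines[$1] $0 "\n" }                # append event to its node
    END { for (i = 1; i <= n; i++) printf "%s", lines[order[i]] }
  '
}

# Usage (watch.log is a placeholder file name):
#   kubectl get no -w | tee watch.log      # stop with Ctrl-C when done
#   group_by_node < watch.log
```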

ㅁ Cleaning Up the Nodes After the Test

[ec2-user@ip-172-31-43-214 ~]$ eksctl scale nodegroup --name=work-nodes --cluster=k8s-peterica --nodes=0 --nodes-min=0

2022-09-29 02:18:55 [ℹ] scaling nodegroup "work-nodes" in cluster k8s-peterica

2022-09-29 02:18:56 [ℹ] waiting for scaling of nodegroup "work-nodes" to complete

2022-09-29 02:22:01 [ℹ] nodegroup successfully scaled
